If your business uses the messaging channel in any capacity, you’ve heard the buzz: RCS for Business is coming. Or, depending on who you ask, RCS for Business is here. The truth is somewhere in the middle. Brands are experimenting, carriers are evolving, and consumers are still enjoying the novelty of shopping straight from their SMS inboxes.

Which means now is actually the right time to A/B test RCS against SMS—as long as you know how to do it.

Let’s start with a tactical guide to help you set up meaningful tests, interpret them correctly, and avoid stepping into a few predictable potholes.

What makes RCS vs SMS worth testing?

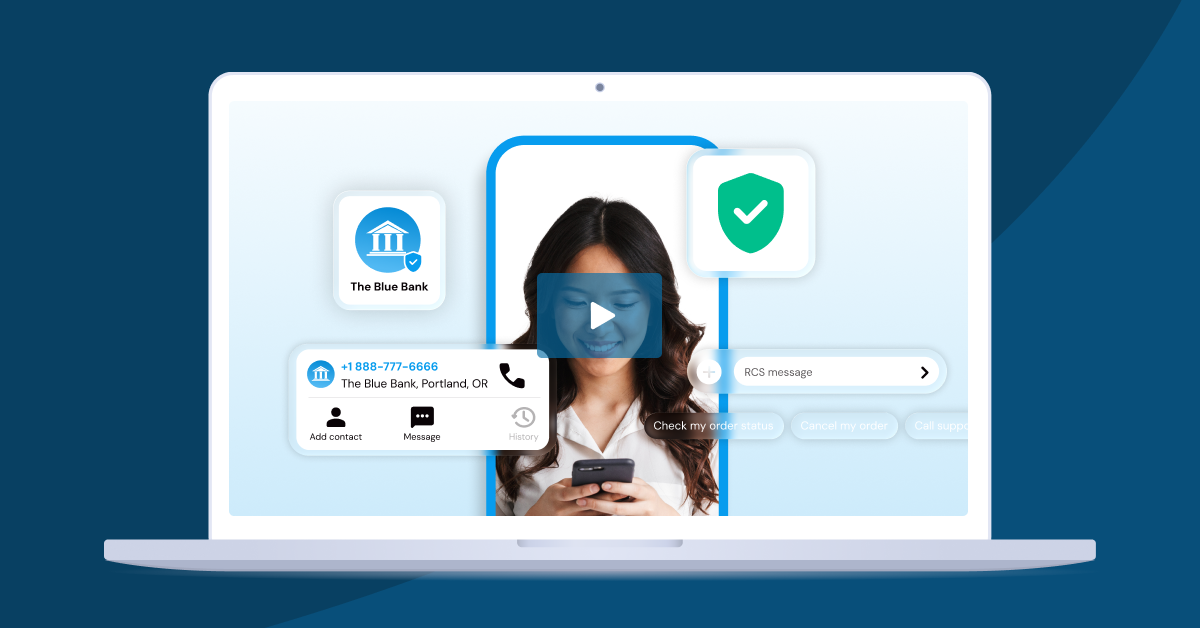

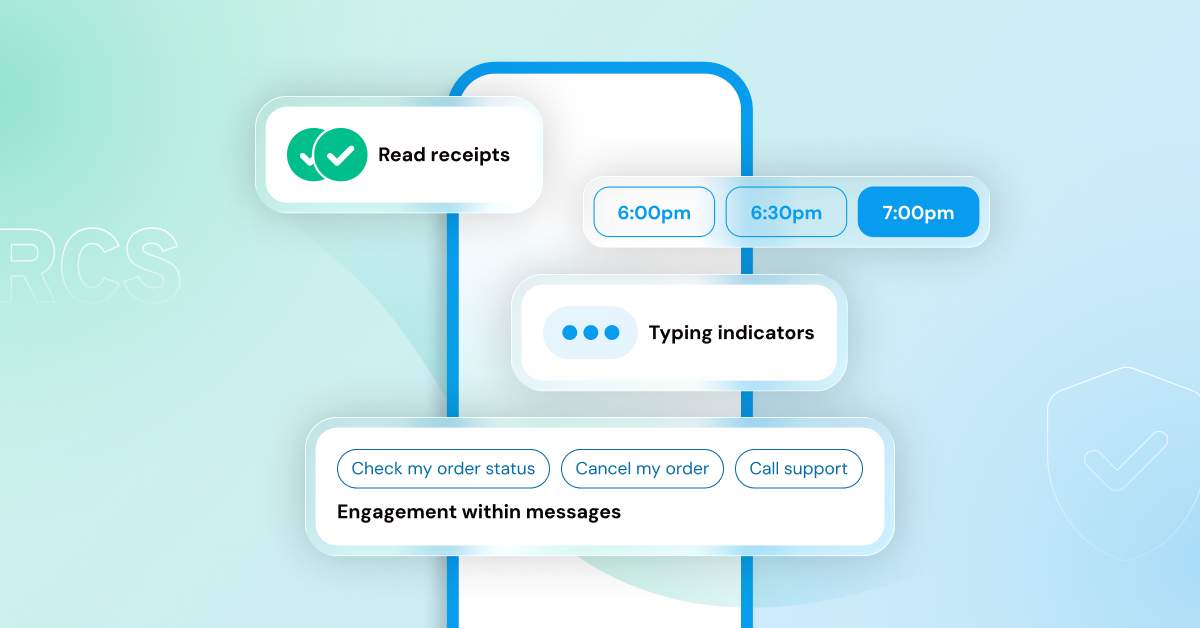

RCS isn’t just SMS with a fresh coat of paint. It’s a richer messaging framework that allows for:

- Buttons and suggested replies

- Carousels

- Verified branding

- Images and videos without MMS workarounds

- Read receipts and delivery indicators

In short, RCS is making messaging more interactive and engaging. That said, elevated features alone aren’t a reason to invest. You need to build a business case that RCS can lift conversions, engagement, or customer satisfaction in your funnel. And the only way to find out is to run a fair test.

Before you test: Be aware of a few realities

- Not everyone can receive RCS. Virtually all smartphones in the US can receive an RCS message, but delivery isn’t guaranteed: the handset still needs a recent OS version (updated within the last year, roughly) and a data connection.

- Your fallback matters. If you send RCS but your user can only receive SMS, what do they get? A barren message? A functional but plain text fallback? This affects how you measure success.

- RCS results can look inflated—because richer messages naturally drive more engagement. Make sure this lift is actually meaningful to your bottom line by connecting those interactions to measurable returns, not just vanity metrics.

- Test environments aren’t always fully standardized. Some carriers may render RCS slightly differently. It’s early days, which means some inconsistency is to be expected.

Setting up the test

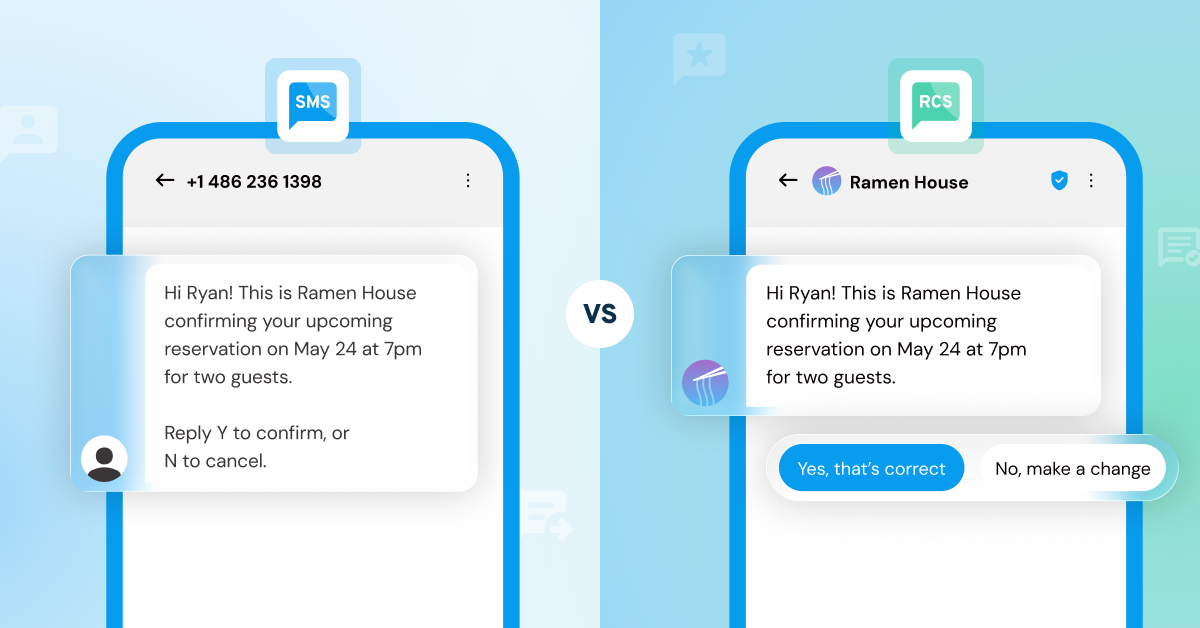

1. Pick the right use case

When you’re testing RCS vs SMS, start with messages that fall back cleanly—think simple, text-based updates your customers already know. That way, the only real differences you’re testing are the fun RCS perks: a verified sender badge and tap‑friendly suggested replies instead of old‑school ‘Reply Y.’

Ideal use cases could look like:

- Appointment confirmations

- Scheduling flows

- Order confirmations

- Subscription renewals

- Prescription ready-for-pickup alerts

- Basic customer support prompts (“Need help? Reply YES to connect”)

Keeping the use case this tight makes it easy to see whether RCS features actually move the needle, and it keeps the lift light for teams deciding whether the upgrade is worth it.

2. Build two experiences that are equally thoughtful

Your test is rigged right off the bat if your RCS message is decked out with carousels and interactive buttons, while your competing SMS is essentially “Hi pls click link.”

Instead, build an SMS version that is optimized for SMS with:

- Clean copy

- Clear CTA

- Link that lands the user exactly where they need to be

- No fluff, no walls of text

Then build your RCS version with functional enhancements like:

- Suggested reply buttons instead of long URLs

- A carousel instead of a paragraph of description

- A branded, verified profile (if possible)

If SMS is your control, don’t sandbag it. You want a fair fight between SMS and RCS.

3. Define success before you hit ‘Send’

This is where most tests stumble. You need metrics that reflect business impact and that can be measured the same way on both channels.

✅ Good metrics might include:

- Conversion rate

- Time-to-action

- Appointment completion

- Cart recovery

- Revenue per message (for promotions)

❌ Less helpful metrics include:

- “People said it looked cool.”

- “We saw more taps but they didn’t lead anywhere.”

- “Our team liked RCS better.”

Tie your KPIs to the part of your funnel that actually matters. RCS should lift real outcomes, not just sentiment.
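To make "measured the same way on both channels" concrete, here is a minimal sketch that computes the same KPIs for each arm from one shared record format. The `MessageOutcome` schema and the `summarize` helper are hypothetical names for illustration, not part of any messaging platform's API; how conversions and revenue get attributed to a message is assumed to be handled upstream.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class MessageOutcome:
    """One sent message and what happened next (hypothetical schema)."""
    arm: str                                   # "sms" or "rcs"
    converted: bool                            # did the recipient complete the goal?
    revenue: float = 0.0                       # revenue attributed to this message
    seconds_to_action: Optional[float] = None  # None if no action was taken


def summarize(outcomes: list[MessageOutcome], arm: str) -> dict:
    """Compute identical KPIs for one arm so the comparison stays fair."""
    rows = [o for o in outcomes if o.arm == arm]
    if not rows:
        raise ValueError(f"no outcomes recorded for arm {arm!r}")
    times = [o.seconds_to_action for o in rows if o.seconds_to_action is not None]
    return {
        "sent": len(rows),
        "conversion_rate": sum(o.converted for o in rows) / len(rows),
        "revenue_per_message": sum(o.revenue for o in rows) / len(rows),
        "median_seconds_to_action": median(times) if times else None,
    }
```

Calling `summarize(outcomes, "sms")` and `summarize(outcomes, "rcs")` on the same list gives two dictionaries you can compare side by side. Taps and opens are deliberately absent: if an interaction doesn’t feed one of these numbers, it probably belongs in the "less helpful" column.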

4. Think about timing and cadence

When it comes to time-boxing your test, the sweet spot is going to depend on what is a meaningful period for your use case:

- For transactional use cases like notifications or confirmations, a single message cycle tested across a wide audience may be all you need to create meaningful results to study.

- For a more complex, interactive marketing campaign, you should plan to execute a full campaign cycle of messages across a smaller base of end users and review the results.

Either way, don’t make decisions based on one small send or a single day’s results. Messaging behavior is noisy: day of week, holidays, and promotions can all skew results.
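How wide is "wide enough"? One rough way to answer is a standard sample-size estimate for comparing two conversion rates. The sketch below uses the usual normal-approximation formula; the function name, the 5% significance level, and the 80% power default are illustrative assumptions, and your analytics team may prefer different thresholds or a different method.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(baseline_rate: float, expected_rate: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate recipients needed per arm to detect the given difference
    in conversion rate with a two-sided two-proportion test."""
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be strictly between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to estimate a sample size")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                             + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)
```

For example, `sample_size_per_arm(0.10, 0.12)` comes out at roughly 3,800 recipients per arm, while hunting for a smaller lift pushes the number up quickly. If your audience is smaller than the estimate, that is a signal to run the test across more send cycles rather than read too much into one.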

5. Monitor the fallback

Here’s the biggest threat to your RCS test: your fallback strategy.

If someone can’t receive your RCS message, what happens?

- They get an SMS fallback (most common)

- They get nothing (disaster!)

If your fallback experience is worse than your SMS control version, your results will be skewed. Keep fallback messaging clean and as close to the SMS control as possible.
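One way to keep an eye on this is to report the RCS arm by how each message was actually delivered, while still judging the test on everyone assigned to that arm. The sketch below assumes a hypothetical `Delivery` record with `assigned_arm` and `delivered_as` fields; real delivery receipts will look different depending on your provider.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Delivery:
    """Hypothetical record: what a user was assigned vs. what they received."""
    assigned_arm: str   # "sms" or "rcs"
    delivered_as: str   # "sms", "rcs", or "none"
    converted: bool


def rate(rows: list[Delivery]) -> Optional[float]:
    """Conversion rate for a group, or None if the group is empty."""
    return sum(r.converted for r in rows) / len(rows) if rows else None


def fallback_report(deliveries: list[Delivery]) -> dict:
    control = [d for d in deliveries if d.assigned_arm == "sms"]
    rcs_arm = [d for d in deliveries if d.assigned_arm == "rcs"]
    native = [d for d in rcs_arm if d.delivered_as == "rcs"]
    fell_back = [d for d in rcs_arm if d.delivered_as == "sms"]
    undelivered = [d for d in rcs_arm if d.delivered_as == "none"]
    return {
        "fallback_share": len(fell_back) / len(rcs_arm) if rcs_arm else None,
        "undelivered_share": len(undelivered) / len(rcs_arm) if rcs_arm else None,
        "control_conversion": rate(control),
        # Headline comparison: everyone assigned to RCS, fallbacks included.
        "rcs_arm_conversion": rate(rcs_arm),
        # Diagnostics: should the fallback text be closer to the control?
        "fallback_conversion": rate(fell_back),
        "native_rcs_conversion": rate(native),
    }
```

If `fallback_conversion` sits well below `control_conversion`, the fallback copy is a likely culprit. Any nonzero `undelivered_share` is worth chasing down before you trust the rest of the numbers.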

6. Land first, then expand

RCS is still in its growth stage. So start your test with manageable parameters before you scale them to make broader investment decisions.

Start with:

- A single use case

- A manageable audience

- A simple experiment

Then expand to new journeys, new messages, and broader audiences once you see consistent results.
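A small, randomized pilot that can grow later might be sketched like this. The `assign` function, the experiment name, and the 10% default are hypothetical choices for illustration. The idea is to hash each user ID so that assignment is random across users but stable for any one user, and to keep early participants in the same arm when the pilot widens.

```python
import hashlib


def assign(user_id: str, experiment: str = "rcs-vs-sms-pilot",
           pilot_fraction: float = 0.10) -> str:
    """Return "rcs", "sms", or "holdout" for a user, deterministically."""
    if not 0 < pilot_fraction <= 1:
        raise ValueError("pilot_fraction must be in (0, 1]")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # The first 8 bytes decide whether the user is in the pilot at all.
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    if bucket >= pilot_fraction:
        return "holdout"
    # A separate byte decides the arm, so raising pilot_fraction later
    # never moves an existing participant from one arm to the other.
    return "rcs" if digest[8] & 1 else "sms"
```

Raising `pilot_fraction` from 0.10 to 0.50 adds new users to both arms and leaves everyone already in the test where they were, which keeps earlier results comparable with later ones. Changing the `experiment` string reshuffles everyone, which is what you want when starting a fresh test on a new use case.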

Common risks (and how to dodge them gracefully)

- Inflated metrics due to novelty

Users may interact more simply because the message looks ‘new.’ Run longer tests to see if the lift holds.

- Over-engineering your RCS

If the user needs to swipe, tap, scroll, choose, confirm, opt in, and then tap again, you’ve replaced simplicity with a UX labyrinth.

- Measuring too many variables at once

Keep your SMS vs RCS differences minimal enough that you can attribute lift to the channel, not a dozen creative changes.

- Using RCS where it doesn’t help

Just because you can add a carousel doesn’t mean you should. Rich messaging should reduce friction, not add distractions.

Interpreting the results

At the end of your test, ask:

- Did RCS improve the journey in a way that creates actual business value?

- Did it reduce friction for the customer?

- Did it shorten the time to conversion?

- Did it drive measurable uplift compared to SMS?

If the answer is yes, RCS may be worth rolling out more widely.

If the answer is “kinda,” “maybe,” or “not really,” then keep iterating. RCS is new enough that optimizing is worth it.
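To tell "measurable uplift" apart from noise, one common approach is a two-proportion z-test on the conversion counts from each arm. The sketch below is one such check under the usual large-sample assumption; the function name and return shape are illustrative, and a p-value is only one input alongside the business questions above.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_sms: int, n_sms: int,
                          conv_rcs: int, n_rcs: int) -> dict:
    """Compare RCS conversion against the SMS control (two-sided test)."""
    if n_sms <= 0 or n_rcs <= 0:
        raise ValueError("both arms need at least one recipient")
    p_sms = conv_sms / n_sms
    p_rcs = conv_rcs / n_rcs
    pooled = (conv_sms + conv_rcs) / (n_sms + n_rcs)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_sms + 1 / n_rcs))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_rcs - p_sms) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "absolute_lift": p_rcs - p_sms,
        "relative_lift": (p_rcs - p_sms) / p_sms if p_sms else None,
        "z": z,
        "p_value": p_value,
    }
```

With 100 conversions out of 1,000 SMS sends against 130 out of 1,000 RCS sends, the p-value lands a little under 0.05, which many teams would treat as a real difference. A borderline result like that is usually a "keep iterating" signal, not a reason to roll out everywhere.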

Remember: It’s still early days

RCS adoption is growing fast, but the ecosystem is still evolving. Carrier coverage, campaign registration flows, and feature parity aren’t fully universal.

That means:

- Tests should be run fairly

- Results should be interpreted with context

- Rollouts should happen in phases

SMS isn’t going anywhere. RCS for Business is simply joining it on stage and expanding what business messaging can do.

The bottom line

If you’re curious about whether RCS can outperform SMS in your customer journeys, A/B testing is the most reliable way to find out. Keep the test controlled, the metrics meaningful, the audience randomized, and the expectations realistic.

A note to yourself: Interactive features are fun, but the real marker of success is value delivered—to your business and your customers.

Set up your RCS vs SMS test with messaging experts.