Voice AI has evolved from a novelty into a critical component of modern user interfaces. We’ve moved from rigid, pre-programmed commands to natural conversations with machines. Yet, for many developers and businesses, building an integrated voice agent remains a challenge, with projects often locked into specific platforms and held together by brittle integrations.

The breakthrough lies not just in making AI models smarter, but in how models connect to the world. Enter the Model Context Protocol (MCP), a rapidly growing standard that is revolutionizing how we build and scale voice AI by fundamentally changing its ability to access external services and data.

Let’s take a look at how MCP solves a critical integration challenge in voice AI, connecting agents with your business’s data and APIs, transforming voice commands into dynamic conversations.

What is Voice AI? A quick refresher

At its core, Voice AI is the technology that allows machines to understand and respond to human speech using a combination of models, shown below.

| Model | Purpose | In other words… |
| --- | --- | --- |
| Automatic Speech Recognition (ASR) | Converts spoken audio into text | What did the user say? |
| Natural Language Understanding (NLU) | Derives meaning and intent from converted text | What does the user want? |
| Large Language Model (LLM) | Decides on the appropriate response or action | What should we do about it? |
| Text-to-Speech (TTS) | Converts the response back into natural-sounding speech | How do we say it back? |
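
To make the flow between these four stages concrete, here is a minimal sketch of a single conversational turn. Every function is a hypothetical stand-in (a real system would call actual ASR, NLU, LLM, and TTS services), so treat it as an illustration of the data flow rather than a working voice stack.

```python
from dataclasses import dataclass


@dataclass
class TurnResult:
    transcript: str     # ASR output
    intent: str         # NLU output
    reply_text: str     # LLM output
    reply_audio: bytes  # TTS output


def asr(audio: bytes) -> str:
    """Stand-in ASR: treats the audio payload as UTF-8 text."""
    return audio.decode("utf-8")


def nlu(transcript: str) -> str:
    """Stand-in NLU: keyword match instead of a trained intent model."""
    return "order_status" if "order" in transcript.lower() else "unknown"


def llm(intent: str) -> str:
    """Stand-in LLM: picks a canned reply for the detected intent."""
    replies = {"order_status": "Let me look up that order for you."}
    return replies.get(intent, "Sorry, could you rephrase that?")


def tts(reply_text: str) -> bytes:
    """Stand-in TTS: encodes text as bytes instead of synthesizing speech."""
    return reply_text.encode("utf-8")


def handle_turn(audio: bytes) -> TurnResult:
    """Run one turn through the four stages in order."""
    transcript = asr(audio)
    intent = nlu(transcript)
    reply_text = llm(intent)
    return TurnResult(transcript, intent, reply_text, tts(reply_text))


result = handle_turn(b"Where is my order?")
print(result.intent, "->", result.reply_text)
```

Each stage only sees the output of the one before it, which is why the quality of the reasoning layer matters so much: it is the only stage that decides what to do.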

The recent leap in LLM capabilities has supercharged the ‘reasoning’ layer. Voice agents are no longer limited to dictating a text message or playing music. They are now powering intelligent virtual assistants in customer service, healthcare, and enterprise environments. For example, businesses are quickly replacing frustrating “press 1” menus with advanced Conversational IVRs. You’ve probably already heard a prompt in your own office, like “Hey, pull up the Q3 sales dashboard on the main screen.”

Despite these advances, integrating agents with external systems can create major bottlenecks. Engineers often use custom integrations to connect their agent with a database, CRM, or SaaS application, but this isn’t scalable. Hard-coding a solution for every use case creates a maintenance nightmare.

What is Model Context Protocol (MCP)?

In simple terms, MCP is a standardized protocol that allows an AI application (like a voice agent) to dynamically discover and use external tools and data sources.

Think of it as a universal USB-C port for AI. Just as your laptop uses a single, standard port to connect to monitors, storage, and network, an MCP-enabled voice agent uses this single protocol to connect to the growing list of services that support MCP, like Salesforce, Bandwidth, or Stripe. Developers expose capabilities as MCP tools, resources, and prompts for any client (e.g., an AI assistant) to discover and use.

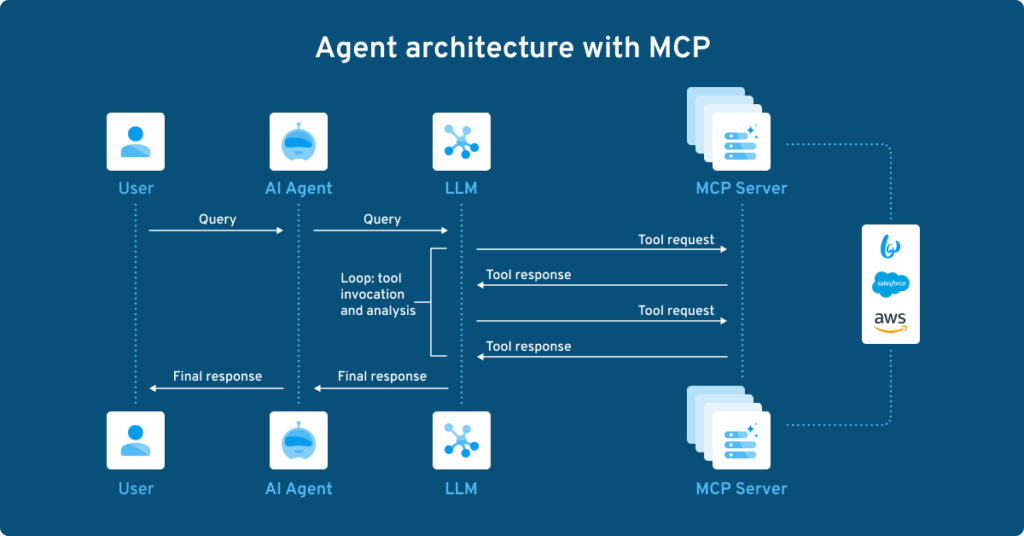
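
Under the hood, MCP messages are JSON-RPC 2.0, and tool discovery and invocation use the `tools/list` and `tools/call` methods. The sketch below only builds and inspects the message payloads; it does not open a connection. The `send_sms` tool, its schema, and the phone number are hypothetical examples, not part of any real server.

```python
import json
from typing import Optional


def jsonrpc_request(request_id: int, method: str,
                    params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# 1. Ask the server which tools it offers.
list_request = jsonrpc_request(1, "tools/list")

# 2. Hypothetical response from a messaging server (the tool name and
#    schema are made up for illustration).
list_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "send_sms",
                "description": "Send a text message to a phone number",
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        "to": {"type": "string"},
                        "text": {"type": "string"},
                    },
                    "required": ["to", "text"],
                },
            }
        ]
    },
}
discovered = [tool["name"] for tool in list_response["result"]["tools"]]

# 3. Invoke a discovered tool by name, with arguments matching its schema.
call_request = jsonrpc_request(
    2,
    "tools/call",
    {"name": "send_sms",
     "arguments": {"to": "+15550100", "text": "Order shipped"}},
)

print(discovered)
print(json.dumps(call_request, indent=2))
```

Because each tool ships with a name, a description, and a JSON Schema for its inputs, the agent can learn what a server offers at runtime instead of having that knowledge hard-coded.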

MCP works through a simple, powerful client-server partnership:

Servers: Think of these as specialized translators for each of your business systems: one for your customer database, another for your calendar, and another for your CPaaS provider. Each translator knows how to ask its system a question and understand the answer.

Host: This is the application users interact with; this could be the LLM-powered voice agent itself. In other words, the host is the “brain” powering the conversation.

Clients: The host opens connections to servers via client instances, allowing the host to interact with multiple external services simultaneously.
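
The relationship between the three roles can be sketched with plain in-memory classes. Nothing here uses a real MCP SDK or transport: `FakeServer`, `Client`, `Host`, and the `find_customer` and `next_meeting` tools are all hypothetical names chosen for illustration. The point is the shape: one client per server, with the host routing each tool call to the client that owns it.

```python
class FakeServer:
    """In-memory stand-in for an MCP server exposing named tools."""

    def __init__(self, name, tools):
        self.name = name
        self._tools = tools  # tool name -> callable

    def list_tools(self):
        return sorted(self._tools)

    def call_tool(self, tool, arguments):
        return self._tools[tool](**arguments)


class Client:
    """One client instance per server connection."""

    def __init__(self, server):
        self._server = server
        self.server_name = server.name

    def list_tools(self):
        return self._server.list_tools()

    def call_tool(self, tool, arguments):
        return self._server.call_tool(tool, arguments)


class Host:
    """Holds the clients and routes each tool call to the right one."""

    def __init__(self):
        self._routes = {}  # tool name -> client that owns it

    def connect(self, server):
        client = Client(server)
        for tool in client.list_tools():
            self._routes[tool] = client
        return client

    def available_tools(self):
        return {tool: c.server_name for tool, c in self._routes.items()}

    def call(self, tool, **arguments):
        return self._routes[tool].call_tool(tool, arguments)


host = Host()
host.connect(FakeServer("crm", {
    "find_customer": lambda name: {"id": "cust_001", "name": name},
}))
host.connect(FakeServer("calendar", {
    "next_meeting": lambda customer_id: {"customer_id": customer_id,
                                         "day": "Tuesday"},
}))

customer = host.call("find_customer", name="Acme Corp")
meeting = host.call("next_meeting", customer_id=customer["id"])
print(host.available_tools())
print(meeting)
```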

So, when a user says, “Please find the latest order for Acme Corp and text me an update,” the voice agent intelligently figures out the steps:

- Connect to one or more servers with database and messaging tools

- Execute the provided tools to find the customer and their latest orders

- Send a text message to the user

MCP is the secure pathway that lets this entire conversation happen smoothly and automatically.

Why is MCP a game-changer for Voice AI?

Integrating MCP with your voice AI stack yields transformative results:

Support dynamic workflows

The voice agent isn’t limited to a pre-defined script. It can dynamically compose a sequence of tool calls on the fly based on the user’s request, enabling next-level automation (e.g., CRM lookup, DB query, ticket creation, sending an SMS).
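
One way to picture this is a loop in which the model repeatedly picks the next tool call until the request is satisfied. In the sketch below, `choose_next_call` is a simple rule-based stand-in for the LLM's decision, and the tools and their return values are hypothetical. It is meant to show that different requests produce different call sequences, not how a real planner works.

```python
# Hypothetical tools; each returns a dict that is merged into the results.
TOOLS = {
    "crm_lookup": lambda name: {"customer_id": "cust_001"},
    "create_ticket": lambda customer_id: {"ticket_id": "tkt_42"},
    "send_sms": lambda text: {"sms_sent": True},
}


def choose_next_call(request, results):
    """Stand-in for the LLM: return (tool, arguments), or None when done."""
    text = request.lower()
    if "customer_id" not in results:
        return ("crm_lookup", {"name": "Acme Corp"})
    if "ticket" in text and "ticket_id" not in results:
        return ("create_ticket", {"customer_id": results["customer_id"]})
    if "text me" in text and "sms_sent" not in results:
        ticket = results.get("ticket_id", "n/a")
        return ("send_sms", {"text": f"Ticket: {ticket}"})
    return None


def run_agent(request, max_steps=5):
    """Call tools one at a time until the planner says it is finished."""
    results, trace = {}, []
    for _ in range(max_steps):  # cap the loop so it always terminates
        step = choose_next_call(request, results)
        if step is None:
            break
        tool, arguments = step
        results.update(TOOLS[tool](**arguments))
        trace.append(tool)
    return trace


print(run_agent("Open a ticket for Acme Corp and text me the number"))
print(run_agent("Look up Acme Corp"))
```

The loop itself never changes; only the planner's choices do, which is what lets the same agent handle requests nobody scripted in advance.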

Secure tool access

MCP supports OAuth 2.1 for authorization, allowing agents to make requests to APIs or services on a user's behalf with that user's permissions. Implemented correctly, this prevents a server with admin-level permissions from inadvertently granting a user access beyond their assigned role.
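
As a rough illustration of the "implemented correctly" part, the sketch below shows a server-side check that compares the scopes granted to the user against the scope a tool requires before running it. It assumes the access token has already been validated and its scopes extracted; the OAuth flow itself is not shown. The tool names, scope names, and `PermissionDenied` exception are hypothetical.

```python
# Hypothetical mapping from tool name to the scope it requires.
REQUIRED_SCOPE = {
    "read_order": "orders:read",
    "refund_order": "orders:refund",
}


class PermissionDenied(Exception):
    """Raised when the user's token lacks the scope a tool requires."""


def call_tool_as_user(tool, user_scopes, arguments):
    """Run a tool only if the user's own scopes allow it.

    The check uses the scopes granted to the user, not the server's own
    (possibly admin-level) credentials.
    """
    required = REQUIRED_SCOPE[tool]
    if required not in user_scopes:
        raise PermissionDenied(f"{tool} requires scope {required}")
    # A real server would call the backing API here.
    return {"tool": tool, "arguments": arguments, "status": "ok"}


support_agent_scopes = {"orders:read"}

allowed = call_tool_as_user("read_order", support_agent_scopes,
                            {"order_id": "ord_18"})
print(allowed["status"])

try:
    call_tool_as_user("refund_order", support_agent_scopes,
                      {"order_id": "ord_18"})
except PermissionDenied as error:
    print("denied:", error)
```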

Scalable and maintainable integrations

Adding a new tool (like Slack) doesn’t require rewriting your voice agent. Developers simply stand up a new MCP Server for that tool, and the agent can immediately discover and use it on the fly.

Voice AI example

Imagine an AI customer support agent. You’ve probably experienced chatbots with limited functionality and predetermined responses, creating a frustrating experience that almost always ends with, “Can I please speak to a human?”

An agent powered by large language models and MCP unlocks a new level of automated support. Instead of relying on its own knowledge, it acts as a central orchestrator. When a user asks a question, this assistant figures out which servers are needed to find the answer. Adding a skill doesn’t require changing the architecture of the agent—the agent can hold connections to multiple MCP servers which can be maintained independently. Then, at runtime, the agent can make specific queries and get back structured data in return.

The agent then takes all these pieces of information, analyzing and combining them into a clear response for the user. In this case, MCP is the bridge that allows the agent to coordinate multiple helpers and handle countless unforeseen queries, as long as the tools are available. Since functionality is spread out across specialized servers, each skillset can be fine-tuned and expanded as needed, boosting flexibility and scalability.

What does this mean for the future of AI development?

Voice AI is moving from one-off automations to agentic ecosystems that blend real-time speech, standardized tool calling, and governed access to enterprise services, with MCP providing the translation layer that makes this reliable and scalable. For businesses, this means faster development, more powerful workflows, and a future-proof architecture. For developers, it means less time writing integrations and more time designing intelligent user and agent experiences.
